Above: Corroded hard drive. Photo by Mark Lyndersay.

BitDepth#1453 for April 08, 2024

A week ago, the world celebrated World Backup Day on March 31. And by celebrated, I actually mean ignored it like every other day, while vaguely hoping that nothing too bad will happen to the computer. Or smartphone. Or tablet.

The only people who might actually raise a toast to World Backup Day are the computer users who have a backup system in place, and they’ll take a quick sip while double-checking their gear in a fit of paranoid worry.

But this is 2024, you might be asking, isn’t all that just happening in the cloud? Well, sometimes it is and sometimes it isn’t.

There are three layers to any backup strategy.

- Where will the data get backed up?

- How will the backup happen?

- How will I restore my data if something terrible happens?

Unfortunately, far too many users stall out on the first question, formulating a vague plan to, for instance, copy everything to a flash drive, or work off Dropbox (or any equivalent cloud-based system that can be mounted on the computer desktop).

When there is terminal data loss, it’s not unusual to discover that critical files aren’t in the backup regimen. Also daunting is the time-consuming task of recreating a computer’s boot drive, which tends to take shape over time, evolving as the device is customised to taste.

There are many hidden configurations and authorisations on a boot drive that aren’t accessible to data level backup tools. The only way to preserve a full system state is to create a bootable clone.

A good baseline for backup is to create a cloned boot volume on one drive and a versioned backup of your data files on another.

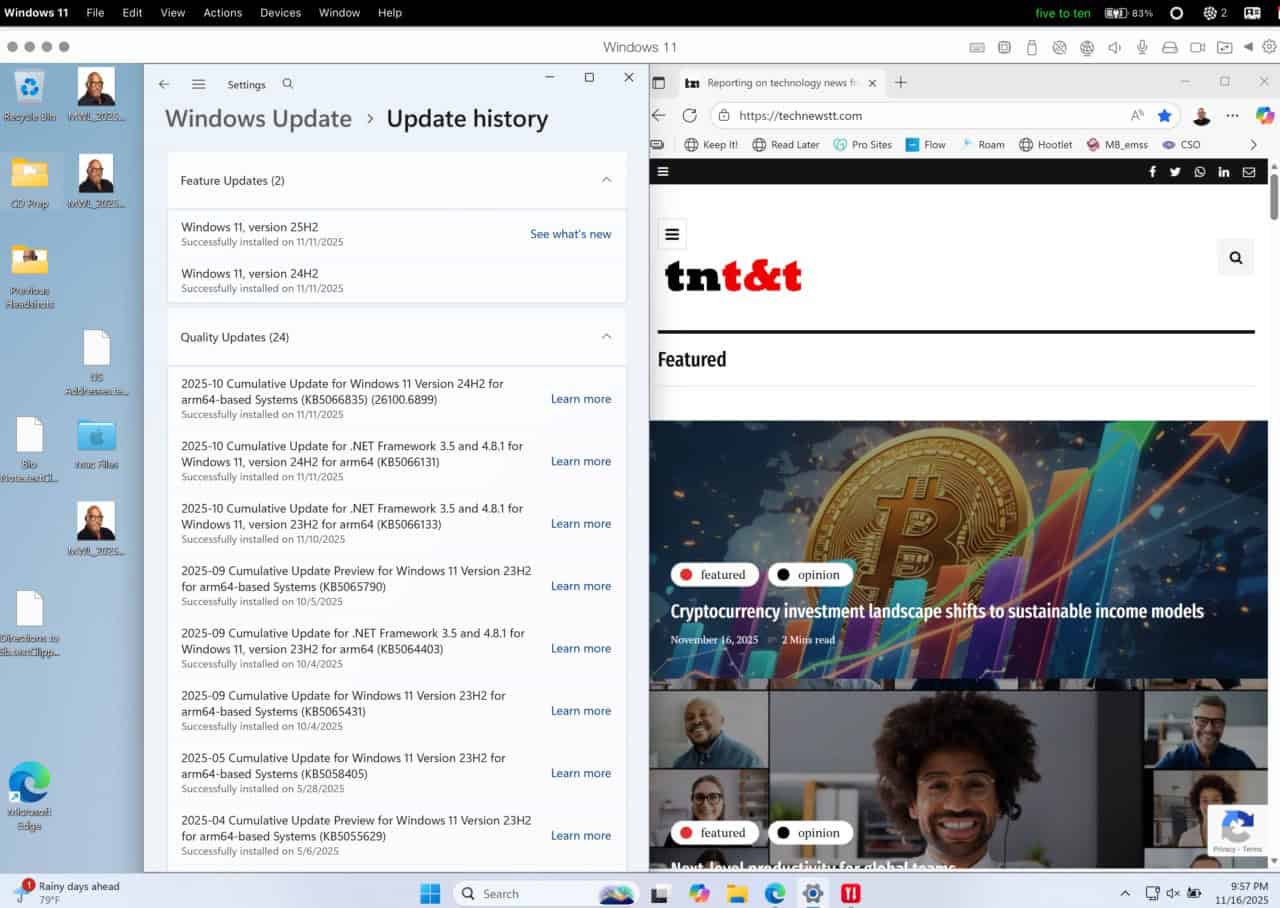

Versioned backups usually require third-party software to implement on Windows. macOS has a useful tool built in, Time Machine, while File History and Windows Backup can be configured to do somewhat equivalent work on PCs running Windows 10 and 11.

(Actually, while doing some updating of my understanding of recent backup systems, I discovered that Time Machine can do backups to networked drives – after a bit of fiddling).

A versioned backup continuously updates the backup of your data to reflect changes made over time. That’s particularly useful if you want to recover the version of a file you created two months ago, which has since been radically changed.
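For readers who like to see the idea in code, here is a minimal sketch of what "versioned" means, assuming nothing more than a folder of files and a backup drive. The function name `snapshot` and the `stamp` parameter are my own inventions for illustration. Real tools such as Time Machine store only what has changed, while this naive version copies everything each time.

```python
import shutil
from datetime import datetime
from pathlib import Path
from typing import Optional


def snapshot(source: Path, backup_root: Path, stamp: Optional[str] = None) -> Path:
    """Copy `source` into a new, timestamped folder under `backup_root`.

    Hypothetical helper: every run adds a fresh folder and never touches
    the earlier ones, so older versions of a file remain recoverable.
    """
    stamp = stamp or datetime.now().strftime("%Y-%m-%d_%H%M%S")
    destination = backup_root / stamp
    # copytree raises an error if the destination already exists,
    # which protects earlier snapshots from being overwritten.
    shutil.copytree(source, destination)
    return destination
```

Calling `snapshot(Path("Documents"), Path("/Volumes/Backup"))` once a day would leave a row of dated folders on the drive, each holding the files as they were that day. Both paths are placeholders.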

Consider using two drives for your versioned backups, rotating them on a schedule to defend against physical damage, theft or malware infection. It’s not an infallible system, but it’s better than relying entirely on the sanctity and robustness of your computer’s hard drive.

A good rule to follow is to have, at minimum, one backup destination for each backup system you deploy.

An effective data retention regime won’t work if you depend on your personal diligence. Enthusiastic beginnings always get bogged down by the absolute dreariness of sitting at a computer moving files around.

It’s also easy to make errors, replacing files with the wrong versions or worse, defeating the goal of backups.

Acronis is a brand that consistently tops lists of Windows-based backup options and the company has a well-regarded suite of tools for enterprise, which often guarantees that useful features leak down to small business and home users (how else are they going to persuade you to upgrade?).

If your datasets are small, it might be possible to create backups in the cloud using services like Google Drive (15GB, inclusive of your Gmail), Box (10GB), Dropbox (2GB) or OneDrive (5GB), but once your data begins to grow, it’s easy to find yourself being pushed into a paid category of use, which might become more expensive than investing in media and software to manage data safety.

If you use a cloud system, then try to use two.

Users with large datasets may want to consider online backup services that scale more affordably, such as Backblaze.

Cloud systems are the easiest way to back up a smartphone or tablet, but moving your bits onto secure media that you own should be part of any successful strategy.

Personally, I use all of it. The first draft of this column is being written far from home base on a smartphone and is automatically being uploaded to cloud servers when I save.

Once you have a strategy in place, with software and hardware identified, choose the fastest connection between your computer and external media that your computer will support.

Today, that’s either USB v3.2 (20 Gb/s), USB v4 (40 Gb/s) or Thunderbolt (40 Gb/s). Lots of drives and cables are rated for USB 3.1 Gen 2, but that only transfers at 10 Gb/s.

As long as your computer has a fast, modern port, match your backup drive and cable connection to that capacity. The one thing you do not want is slow backups.

Even across the fastest possible direct connections, moving a terabyte of data takes time.
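A rough back-of-envelope calculation shows why. Interface ratings are quoted in gigabits per second, so a terabyte (eight trillion bits) has to be divided by that figure. The sketch below is my own illustration, and the `efficiency` parameter is an assumption standing in for protocol overhead and drive speed, which in practice push real transfers well below the rated maximum.

```python
def transfer_minutes(data_terabytes: float,
                     link_gigabits_per_second: float,
                     efficiency: float = 1.0) -> float:
    """Best-case minutes needed to move the data across a link.

    `efficiency` is a hypothetical fudge factor between 0 and 1;
    1.0 means the link runs at its full rated speed, which it rarely does.
    """
    data_bits = data_terabytes * 1e12 * 8  # decimal terabytes to bits
    bits_per_second = link_gigabits_per_second * 1e9 * efficiency
    return data_bits / bits_per_second / 60


if __name__ == "__main__":
    # Rated signalling speeds in gigabits per second.
    links = {
        "Thunderbolt / USB4": 40,
        "USB 3.2 Gen 2x2": 20,
        "USB 3.1 Gen 2": 10,
        "USB 2.0": 0.48,
    }
    for name, gbps in links.items():
        print(f"{name}: {transfer_minutes(1, gbps):.1f} minutes per terabyte")
```

Even at the theoretical maximum, a terabyte over a 40 Gb/s link takes a little over three minutes. Over USB 2.0 the same job runs to more than four and a half hours, and that is before the drive itself becomes the bottleneck.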

Most backup software will allow you to set a schedule (incremental backup systems work best with the backup media connected to the computer and live). If the destination media is not connected, good software will prompt the user to attach it so it can proceed with the scheduled backup.

The next critical step is establishing the viability of a restore. You must test the restoration of data from your chosen backup system.
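One way to test a restore, sketched here under the assumption that you have restored a folder to a scratch location and still have the original to compare against, is to checksum every file on both sides. The function names `file_digest` and `verify_restore` are hypothetical. Good backup software often has its own verification feature, which is worth using first.

```python
import hashlib
from pathlib import Path
from typing import List


def file_digest(path: Path) -> str:
    """Return the SHA-256 checksum of a file, read in 1 MB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(original: Path, restored: Path) -> List[str]:
    """List files that are missing from, or differ in, the restored copy."""
    problems = []
    for source_file in sorted(original.rglob("*")):
        if not source_file.is_file():
            continue
        relative = source_file.relative_to(original)
        restored_file = restored / relative
        if not restored_file.is_file():
            problems.append(f"missing: {relative.as_posix()}")
        elif file_digest(source_file) != file_digest(restored_file):
            problems.append(f"changed: {relative.as_posix()}")
    return problems
```

An empty list means every file in the original folder came back intact. Anything else is a reason to look hard at the backup before you need it.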

That’s a core test, but you also need to consider what you are backing up and where.

How much data can you afford to lose? I’ve known of situations where people only discovered the value of their data when they found out how necessary it was to their operations, how much it costs to recover it from failed media and how difficult it is to reconstruct it by requesting it from disinterested third parties.

“Can you send me back that file?” gets really old really fast.

Even when you have a backup system in place, it’s not done; it’s the start of a journey.

Computers have a functional life of around five years, and most media will last roughly that long before either becoming more prone to failure or simply running out of room.

Expect to rotate backup media after five years. It’s perfectly fine to repurpose old drives for less critical work, but as your digital archives grow in size, migration to newer media becomes an increasingly challenging aspect of data preservation.

After five years, the gap between media speeds and connections tends to grow even wider and migration always happens at the speed of the slowest device in the chain.

Trying to move data off a 2x speed CD in 2024 is almost unnervingly slow, particularly if error checking kicks in on the disc. Doing it over USB 2 (60 MB/s) is classifiable as a kind of torture.

It’s a simple, remorseless reality. Delaying the creation of a backup and data migration regime doesn’t just increase the risk of terminal loss; it also makes the process more difficult and, sometimes, almost impossible.
