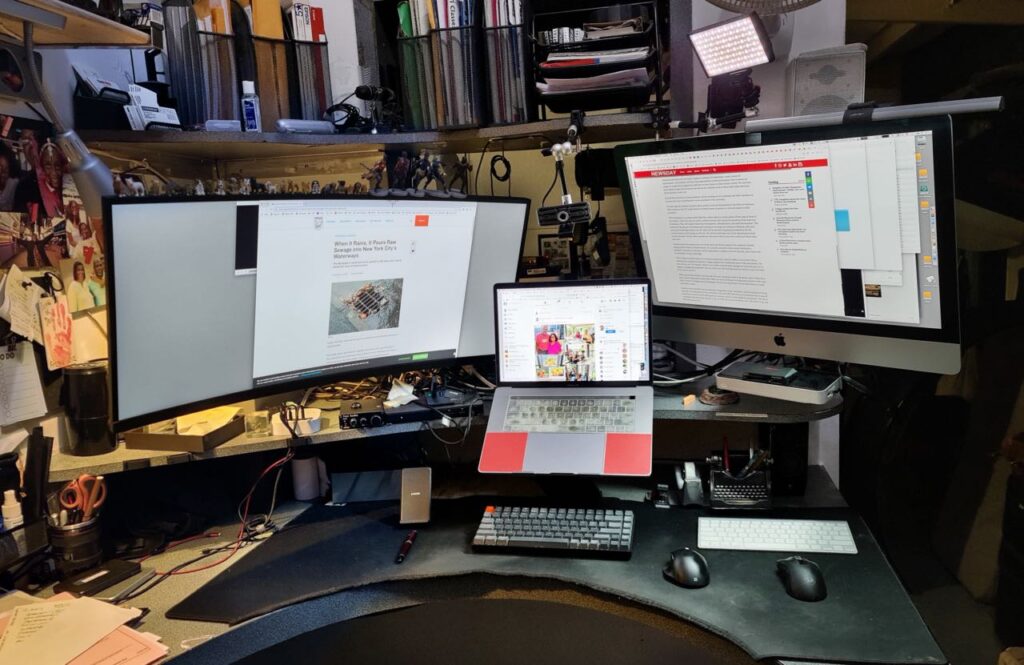

Above: The full strip-down of my desk to tabletop and cables to re-engineer the space and layout. The wall mount arm at right has already been moved and remounted. All photos by Mark Lyndersay.

Readers of the BitDepth column will be aware of the catastrophic failure of my imaging workstation in March, the initial evaluation of the situation and the steps I planned to take to get the system back up and running again.

The background

In the interests of completeness, this update details what actually happened when I began putting the components of the system back together.

The system that failed was a 2009 model MacPro tower, one of the so-called "cheese-grater" systems and a significant slab of anodized aluminium in its own right.

That line of computers, which came to an end in 2013, was the most expandable computer system that Apple ever engineered except perhaps for the IIci, an ugly little beige box into which I crammed everything from RAM chips on risers to increase capacity to an early PowerPC 604 chip on an adapter card.

The MacPro tower had had a fine run from December 2012 to March 2022, running 24/7 except for extended power outages and restarts for updates.

When it went down, it had been gutted to accommodate seven separate drives, including two SSDs, one of them on a PCI adapter card.

What replaced it

The one governing requirement for the replacement machine was that it be able to run an earlier version of the Mac OS, version 10.13, High Sierra.

The replacement was a 27-inch iMac17,1 (late 2015 model) with a quad-core Intel i7 chip, sourced from Other World Computing (OWC) along with drive cases to handle the drives I'd need access to.

The iMac ships in a box of the size you'd normally expect a large flat-panel TV to come in, and because shipping is charged on dimensional weight, that makes it quite expensive to ship.

I took the option of free shipping to my Miami Skybox address. That avoided a shipping fee of roughly ten per cent of the cost of the machine itself at the price of an extra eight days in transit, but there was no avoiding the dimensional weight charges between Miami and Trinidad.

OWC offers upgrades on these second-hand systems before shipping, so I upgraded RAM and requested an SSD as the internal drive.

The systems moving through OWC's used computer service change constantly. I ended up cancelling my first order when, an hour later, an even better qualifying system appeared online, but I gave up on the site after that second order, because the ideal machine seemed to be a constantly moving target.

The hardware

The i7 would prove to be generally adequate, but not quite in the class of the quad-core Xeon chips in the significantly older tower.

Some of the slowdowns I've experienced might be the result of the built-in graphics, a 2GB Radeon M395 (it is Metal-capable, though), which drives the external monitor I sometimes use with the system but doesn't have the GPU overdrive of the RX580 I put in the tower.

I did grit my teeth when, on an idle browse through the used computer listings, I found an iMac with an 8GB RX580 GPU, but decided it was probably best to stop looking at fruit I wasn't going to be able to pick.

Dance with the date you brought to the party and all that. Also, I stopped looking at the listings.

Software issues

On arrival, the Mac booted without issues, but any attempt to run an installer kept invoking a remote access request to Blizzard Entertainment, which offered a hint of where it might have spent its earlier life.

To completely nuke the system and eliminate any residual dependencies before cloning my previous OS over from the tower, I resorted to an internet-based drive scrub using Apple's online diagnostics boot. Slow, but thorough.

Once the cloning was done, I had a bootable OS that I needed to reconnect with multiple services and authorisations. Unfortunately, Lightroom, one half of the reason I stick with this particular version of the OS, didn't make it through the process.

Until the crash, I’d been running Lightroom 6 and Photoshop CS6, the last non-subscription versions of Adobe’s image editing software.

Because the system went down suddenly, I couldn't deactivate either application, and it turns out that Adobe is being quite sneaky about allowing users of its fully licensed software to remove activations.

In short, you can’t. There is no option to do so for anything other than subscription software.

I had one activation left for Photoshop, but none for Lightroom and no way around this hurdle. Adobe's support doors for its legacy products are firmly and irrevocably shut.

For at least two years, I’d been auditioning replacements for both products, but habit and muscle/mouse reflex are tempting to fall back on. So while I owned Capture One, it took a completely non-functional Lightroom to push me to adapt to a new system.

Capture One is quite a different beast. It imports an existing Lightroom catalog without any fuss, but some metadata doesn't transfer. You don't, for instance, get flags as a rating indicator, so my methodology for rating and ranking images has had to change a bit.

That aspect of the process is still ongoing. Fortunately, this system can be upgraded all the way to macOS 12 Monterey, so when I finally decide to leave 32-bit software behind, I can jump most of the way to a current version of the OS.

Additional hardware

Because the hardware was going to be quite different, engineering a desk layout for this new mix of bits and pieces demanded that I strip everything down to the laminated desk surface and mostly bare walls.

I’m quite a fan of the adaptability of wall mounted monitors and this particular model of iMac does not have a VESA mount option.

I sourced a moderately priced third-party VESA adapter that's designed to clamp onto the iMac's aluminium stand, but soon discovered that the wall mount was bolted too low for the iMac on its stand and would have to be moved, a not insignificant undertaking involving expanding bolts and really big drill bits.

The iMac supports USB3 and Thunderbolt 2, which I expanded using an older OWC Thunderbolt dock and USB hub.

There are two Thunderbolt ports available. One connects to the dock, which drives the external monitor over DisplayPort, and the other is connected directly to a two-bay Thunderbolt 2 drive box for the storage I need to access at full speed.

A second, four-bay USB3 drive box holds drives that are less frequently accessed and run fine at the 5 Gbps speed of a direct USB3 connection.

The Thunderbolt 2 dock gives me one FireWire 800 port to connect my infrequently accessed drives in a third, older four-bay drive box, as well as a drive bay for removable drives.

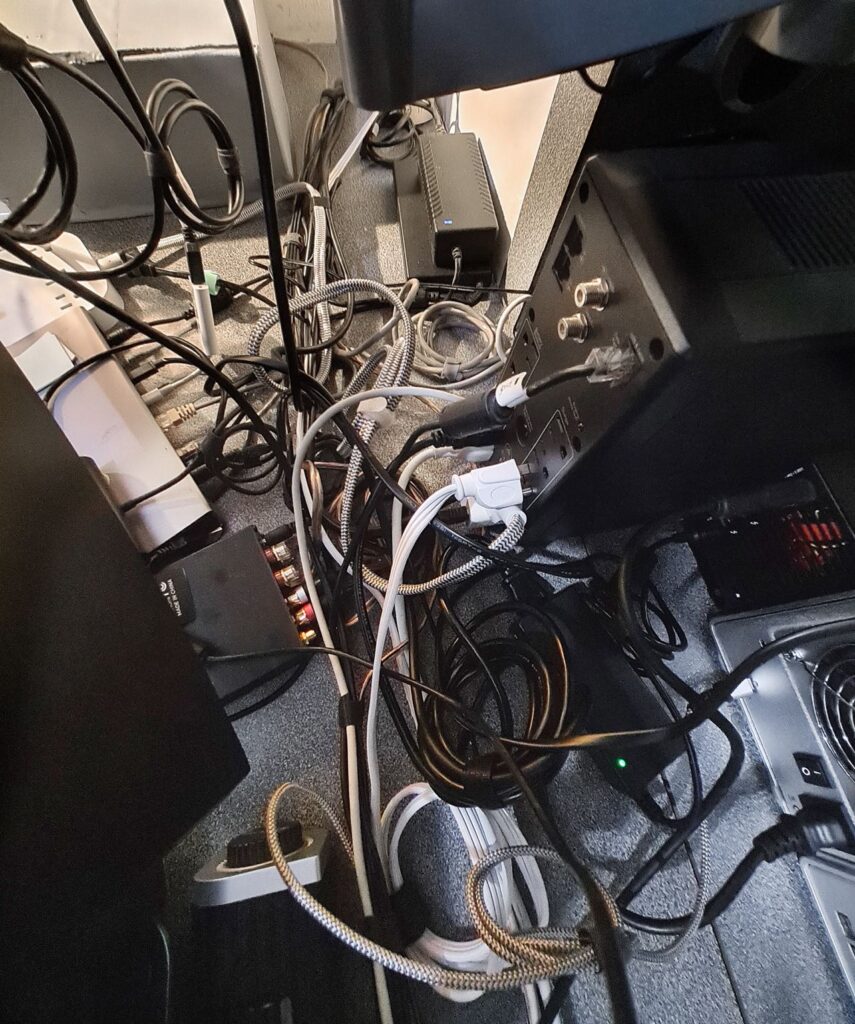

Wires everywhere

All of the drive connections were internal on the tower, but now each device required cabling across the desk to transfer both power and data.

I spent at least two hours sorting out the cable runs and then creating two cable bundles, one grouping the power cables and the other the data cables, kept as separate from each other as possible. Both are still somewhat messy because of the branching that multiple devices require.

There’s nothing quite like the frustration of running a cable, carefully bundling it every six inches or so with a velcro strip, and then realising it’s two inches too short and must be replaced. Good times!

Back to normal. Mostly.

The crazy thing is that now that things have settled down, the setup is functionally the same as it was before the crash.

So much so that a friend dropped by, looked over everything and then asked, “Where’s the new computer?”

In practice, the system isn’t quite as responsive as the MacPro it replaced, some of it due to the slower cabled connections to the external drives, some of it likely due to the drop in GPU power from the RX580, which goosed the performance of Metal optimised apps significantly.

I also suspect that single-core performance, which is where most apps spend their time, isn't as robust on a single 4 GHz i7 core as it was on a single 3.3 GHz Xeon core.

It isn’t a switch I’d have set out to make (I was really comfortable using that old tower), but the whole exercise has proven to be a lateral move, neither a step back nor forward except for the power draw, which has dropped significantly.

The tower alone drew so much power from a 1500 VA UPS that it would only run for ten minutes on battery. With the iMac, three drive cases holding a total of ten drives, and a Thunderbolt dock plugged in, uptime has jumped to 45 minutes, which still surprises me.

That tower drew a lot of power and reducing that draw is ultimately a good thing all round.